Key Concepts

- 🔧 Tools - Tools are functions (with some extra niceties) that can be called and do something. That's it!

- 🤖 Agents - Agents are tools that use LLMs. Different kinds of agents can call other tools, which might be agents themselves!

- IterativeAgents - IterativeAgents are multi-shot agents that repeatedly call an LLM to try to perform their task, and that can identify when the task is complete.

- 🧰 BackendAgents - BackendAgents are agents that utilize a Backend to perform their task.

- 💬 Chats - Chats are agents that interact with a user over a prolonged interaction in some way, and can be paired with tools, backends, and other agents.

- Backends - Backends are systems that empower an LLM to utilize tools and detect when it is finished with its task. You probably won't need to worry about them!

- 📦 Connectors - Connectors are systems that can trigger your agents in a configurable manner. Want a web server for your agents? Or want your agent firing off every hour? arkaine has you covered.

- Context - Context provides thread-safe state across tools. No matter how complicated your workflow gets by plugging agents into agents, contexts will keep track of everything.

Installation

To install arkaine, ensure you have Python 3.8 or higher installed. Then, you can install the package using pip:

pip install arkaine

Examples

Check out the examples for some hands-on mini projects that walk you through much of arkaine, though these docs are great for a complete walkthrough.

Creating Your Own Tools and Agents

Creating a Tool

There are several ways to create a tool. You can do this through inheritance, a function call, or a decorator. Let's cover each.

Just using Tool

First, we can create a tool by instantiating Tool directly.

    from arkaine.tools.tool import Argument, Tool

    my_tool = Tool(
        name="my_tool",
        description="A custom tool",
        args=[Argument("name", "The name of the person to greet", "str", required=True)],
        func=lambda context, kwargs: f"Hello, {kwargs['name']}!",
    )

    my_tool({"name": "Jeeves"})

Inheriting from Tool

Second, we can define a class that inherits from the Tool class. Implement the required methods and define the arguments it will accept.

    from arkaine.tools.tool import Tool, Argument


    class MyTool(Tool):
        def __init__(self):
            args = [
                Argument("input", "The input data for the tool", "str", required=True)
            ]
            super().__init__("my_tool", "A custom tool", args, self._my_func)

        def _my_func(self, context, kwargs):
            # Implement the tool's functionality here
            return f"The meaning of life is {kwargs['input']}"


    my_tool = MyTool()  # instantiate the tool before calling it
    my_tool(42)

By default, the tool calls invoke internally, which in turn calls the passed func argument. So you can either act as above, or instead override invoke. Overriding invoke loses some useful additional parameter checking, however, and thus is not recommended.

toolify decorator

Since Tools are essentially functions with built-in niceties for arkaine integration, you may want to quickly turn an existing function in your project into a Tool. To do this, arkaine provides toolify.

    from typing import Optional

    from arkaine.tools import toolify


    @toolify
    def func(name: str, age: Optional[int] = None) -> str:
        """
        Formats a greeting for a person.

        name -- The person's name
        age -- The person's age (optional)
        returns -- A formatted greeting
        """
        return f"Hello {name}!"


    @toolify
    def func2(text: str, times: int = 1) -> str:
        """
        Repeats text a specified number of times.

        Args:
            text: The text to repeat
            times: Number of times to repeat the text

        Returns:
            The repeated text
        """
        return text * times


    def func3(a: int, b: int) -> int:
        """
        Adds two numbers together

        :param a: The first number to add
        :param b: The second number to add
        :return: The sum of the two numbers
        """
        return a + b


    func3 = toolify(func3)

docstring scanning

Not only will toolify turn func, func2, and func3 into Tools, it also attempts to read the type hints and docstrings to create a fully fleshed-out tool for you, so you don't have to rewrite descriptions or argument explanations.
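
To make the idea of docstring scanning concrete, here is a minimal standard-library sketch of how a function's name, summary line, and arguments can be recovered from its signature and type hints. It is not arkaine's implementation; `describe_function` and the returned dictionary layout are hypothetical names chosen for illustration.

```python
import inspect
from typing import Optional, get_type_hints


def describe_function(func):
    """Collect the name, summary line, and argument details of a function."""
    hints = get_type_hints(func)
    signature = inspect.signature(func)
    doc = (inspect.getdoc(func) or "").strip()
    # Use the first docstring line as the description, if there is one.
    summary = doc.splitlines()[0] if doc else ""

    args = []
    for name, param in signature.parameters.items():
        args.append(
            {
                "name": name,
                "type": hints.get(name, str),
                # A parameter without a default must be supplied by the caller.
                "required": param.default is inspect.Parameter.empty,
            }
        )
    return {"name": func.__name__, "description": summary, "args": args}


def greet(name: str, age: Optional[int] = None) -> str:
    """Formats a greeting for a person."""
    return f"Hello {name}!"


info = describe_function(greet)
print(info["name"], [a["name"] for a in info["args"]])
```

A real scanner would also parse the per-argument descriptions out of the docstring body, which this sketch leaves out.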

Creating an Agent

To create an agent, you have several options. All agents are tools that utilize LLMs, and there are a few different ways to implement them based on your needs.

In order to create an agent, you generally need to provide:

- An explanation for what the overall goal of the agent is (and how to accomplish it) and...

- A method to take the output from the LLM and extract a result from it.

Using SimpleAgent

The easiest way to create an agent is to use SimpleAgent, which allows you to create an agent by passing functions for prompt preparation and result extraction:

    from arkaine.tools.agent import SimpleAgent
    from arkaine.tools.tool import Argument
    from arkaine.llms.llm import LLM

    # Create agent with functions
    agent = SimpleAgent(
        name="my_agent",
        description="A custom agent",
        args=[Argument("task", "The task description", "str", required=True)],
        llm=my_llm,
        prepare_prompt=lambda context, **kwargs: f"Perform the following task: {kwargs['task']}",
        extract_result=lambda context, output: output.strip()
    )

Inheriting from Agent

For more complex agents, you can inherit from the Agent class. Implement the required prepare_prompt and extract_result methods:

    from typing import Any, Optional

    from arkaine.llms.llm import LLM, Prompt  # Prompt's import path is an assumption
    from arkaine.tools.agent import Agent
    from arkaine.tools.tool import Argument


    class MyAgent(Agent):
        def __init__(self, llm: LLM):
            args = [
                Argument("task", "The task description", "str", required=True)
            ]
            super().__init__("my_agent", "A custom agent", args, llm)

        def prepare_prompt(self, context, **kwargs) -> Prompt:
            """
            Given the arguments for the agent, create the prompt to feed to the
            LLM for execution.
            """
            return f"Perform the following task: {kwargs['task']}"

        def extract_result(self, context, output: str) -> Optional[Any]:
            """
            Given the output of the LLM, extract and optionally transform the
            result. Return None if no valid result could be extracted.
            """
            return output.strip()

Creating IterativeAgents

IterativeAgents are agents that can repeatedly call an LLM to try to perform their task; the agent signals that it is complete by returning a non-None value from extract_result. To create one, inherit from the IterativeAgent class:

    from typing import Any, Optional

    from arkaine.llms.llm import LLM, Prompt  # Prompt's import path is an assumption
    from arkaine.tools.agent import IterativeAgent
    from arkaine.tools.tool import Argument


    class MyIterativeAgent(IterativeAgent):
        def __init__(self, llm: LLM):
            super().__init__(
                name="my_iterative_agent",
                description="A custom iterative agent",
                # prepare_prompt reads kwargs["task"], so declare it as an argument
                args=[Argument("task", "The task description", "str", required=True)],
                llm=llm,
                initial_state={"attempts": 0},  # Optional initial state
                max_steps=5,  # Optional maximum iterations
            )

        def prepare_prompt(self, context, **kwargs) -> Prompt:
            attempts = context["attempts"]
            context["attempts"] += 1
            return f"Attempt {attempts}: Perform the following task: {kwargs['task']}"

        def extract_result(self, context, output: str) -> Optional[Any]:
            # Return None to continue iteration, or a value to complete
            if "COMPLETE" in output:
                return output
            return None

The key differences in IterativeAgent are:

- You can provide initial_state to set up context variables

- You can set max_steps to limit the number of iterations

- Returning None from extract_result will cause another iteration

- The agent continues until either a non-None result is returned or max_steps is reached

You can optionally pass an initial state when implementing your IterativeAgent. This is a dictionary of key-value pairs that will be used to initialize the context of the agent, allowing you to utilize the context to handle state throughout the prepare_prompt and extract_result methods.
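
The control flow described above can be sketched without any LLM at all. The following is a minimal illustration, not arkaine's code: `run_iteratively` and `fake_llm` are hypothetical, and raising an error once max_steps is exhausted is an assumption about what "reached" should mean.

```python
from typing import Any, Callable, Dict, Optional


def run_iteratively(
    call_llm: Callable[[str], str],
    prepare_prompt: Callable[[Dict[str, Any]], str],
    extract_result: Callable[[Dict[str, Any], str], Optional[Any]],
    initial_state: Optional[Dict[str, Any]] = None,
    max_steps: int = 5,
) -> Any:
    # The state plays the role of the context seeded by initial_state.
    state = dict(initial_state or {})
    for _ in range(max_steps):
        output = call_llm(prepare_prompt(state))
        result = extract_result(state, output)
        if result is not None:  # a non-None value ends the loop
            return result
    # Assumption: running out of steps is treated as a failure.
    raise RuntimeError(f"no result after {max_steps} steps")


# Hypothetical stand-in for an LLM that only finishes on its third call.
replies = iter(["thinking", "still thinking", "COMPLETE: 42"])


def fake_llm(prompt: str) -> str:
    return next(replies)


def prepare(state: Dict[str, Any]) -> str:
    state["attempts"] += 1
    return f"Attempt {state['attempts']}: perform the task"


def extract(state: Dict[str, Any], output: str) -> Optional[str]:
    return output if "COMPLETE" in output else None


result = run_iteratively(fake_llm, prepare, extract, {"attempts": 0}, max_steps=5)
print(result)
```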

Chats

Chats are assumed to inherit from the Chat abstract class. They follow some pattern of interaction with the user. Chats create Conversations: histories of messages shared between two or more entities, typically the user and the agent, but not necessarily limited to these. The Chat class can also determine whether an incoming message starts a new conversation or continues the previous one.

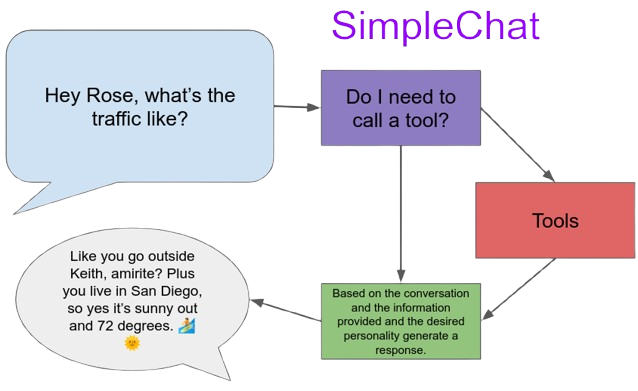

SimpleChat

The SimpleChat class is currently the sole implementation, though more are planned. It is deemed "simple" because it only supports one user talking to one agent, in the typical pattern of a user message followed by an agent response. It supports:

- Multiple conversations with isolated histories

- Tool/agent integration for enhanced capabilities

- Conversation persistence

- Custom agent personalities

- Multiple LLM backends

Basic Usage

Here's a simple example of creating and using SimpleChat:

    from arkaine.chat.simple import SimpleChat
    from arkaine.chat.conversation import FileConversationStore
    from arkaine.llms.openai import OpenAI

    # Initialize components
    llm = OpenAI()
    store = FileConversationStore("path/to/store")
    tools = [tool1, agent1]

    # Create chat instance
    chat = SimpleChat(
        llm=llm,
        tools=tools,
        store=store,
        agent_name="Rose",  # Optional, defaults to "Arkaine"
        user_name="Abigail",  # Optional, defaults to "User"
    )

    while True:
        msg = input("Abigail: ")
        if msg.lower() in ["quit", "exit"]:
            break

        response = chat(message=msg)
        print(f"Rose: {response}")

Advanced Usage

SimpleChat can be customized with different backends, personalities, and tool configurations:

- personality - a brief sentence or so describing the preferred personality of the agent's responses.

- conversation_auto_active - if set, the chat will automatically continue a conversation when a new message arrives within the specified time window of the prior conversation; otherwise, an LLM is asked to decide whether the current message belongs to a new conversation or the prior one.

Tool Integration

SimpleChat can leverage tools to enhance its capabilities. When a user's message implies a task that could be handled by a tool, SimpleChat will automatically identify and use the appropriate tool.

It does this by giving an LLM the latest message, the prior messages in the conversation, and the descriptions of the tools, and asking it to identify any "tasks" that could benefit from a tool. Once generated, each task is individually fed into a Backend.

BackendAgents

BackendAgents are agents that utilize a Backend to perform their task. A Backend is a system that empowers an LLM to utilize tools and detect when it is finished with its task.

A Backend handles:

- Formatting the tool's descriptions and arguments into a consumable format for the LLM.

- Calling the LLM and then parsing its response to determine if the model has called any tools.

- Parsing the tool calls and then...

- Calling in parallel each tool, and on return...

- Formatting the results of the tool calls into a consumable format for the LLM, repeating until...

- The LLM appropriately responds that it is complete.
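
The steps above can be sketched as a small loop. This is an illustration under stated assumptions, not arkaine's Backend: `run_backend`, the `CALL`/`DONE` text protocol, and `fake_llm` are all hypothetical stand-ins.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, List, Optional, Tuple

ToolCall = Tuple[str, Dict[str, Any]]


def run_backend(
    call_llm: Callable[[List[str]], str],
    parse_tool_calls: Callable[[str], List[ToolCall]],
    parse_result: Callable[[str], Optional[str]],
    tools: Dict[str, Callable[..., Any]],
    task: str,
    max_rounds: int = 10,
) -> str:
    # Format the tool names and the task into a prompt for the model.
    prompt = [f"Tools: {', '.join(sorted(tools))}", f"Task: {task}"]
    for _ in range(max_rounds):
        response = call_llm(prompt)
        result = parse_result(response)
        if result is not None:  # the model says it is complete
            return result
        calls = parse_tool_calls(response)
        # Call every requested tool in parallel and wait for all of them.
        with ThreadPoolExecutor() as pool:
            futures = [pool.submit(tools[name], **args) for name, args in calls]
            outputs = [future.result() for future in futures]
        # Feed the results back to the model for the next round.
        prompt.append(response)
        for (name, _), output in zip(calls, outputs):
            prompt.append(f"{name} returned: {output}")
    raise RuntimeError("backend did not finish")


def fake_llm(prompt: List[str]) -> str:
    # Hypothetical model: asks for one tool call, then reports the answer.
    if any("add returned" in line for line in prompt):
        return "DONE 5"
    return "CALL add 2 3"


def parse_calls(text: str) -> List[ToolCall]:
    if text.startswith("CALL "):
        _, name, a, b = text.split()
        return [(name, {"a": int(a), "b": int(b)})]
    return []


def parse_done(text: str) -> Optional[str]:
    return text[len("DONE "):] if text.startswith("DONE ") else None


answer = run_backend(
    fake_llm, parse_calls, parse_done, {"add": lambda a, b: a + b}, "add 2 and 3"
)
print(answer)
```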

To create one, inherit from the BackendAgent class.

You need two things for a BackendAgent:

- An agent_explanation, which is fed to the LLM through the backend's prompt to tell the LLM what it is expected to be.

- A method that, given the arguments, returns a dictionary of arguments for the backend. Almost always (unless the backend specifies otherwise) the expected format is:

{ "task": "..." }

...wherein task is a text that describes the individual task at hand.

    from arkaine.tools.agent import BackendAgent
    from arkaine.tools.tool import Argument


    class MyBackendAgent(BackendAgent):
        def __init__(self, backend: Backend):
            super().__init__(
                "my_backend_agent",
                "A custom backend agent",
                # prepare_for_backend reads kwargs["question"], so declare it
                [Argument("question", "The question to answer", "str", required=True)],
                backend,
            )

        def prepare_for_backend(self, **kwargs):
            # Given the arguments for the agent, transform them
            # (if needed) for the backend's format. These will be
            # passed to the backend as arguments.
            question = kwargs["question"]

            return {
                "task": f"Answer the following question: {question}",
            }

Note that the prepare_for_backend method is optional. If you do not implement it, the backend agent will pass the arguments as-is to the backend.

Creating a Custom Backend

If you wish to create a custom backend, you have to implement several functions.

    class MyBackend(BaseBackend):
        def __init__(self, llm: LLM, tools: List[Tool]):
            super().__init__(llm, tools)

        def parse_for_tool_calls(
            self, context: Context, text: str, stop_at_first_tool: bool = False
        ) -> ToolCalls:
            # Given a response from a model, isolate any calls to tools
            ...
            return []

        def parse_for_result(self, context: Context, text: str) -> Optional[Any]:
            # Given a response from a model, isolate any result. If no
            # result is found (None), the backend will continue calling itself.
            ...
            return None

        def tool_results_to_prompts(
            self, context: Context, prompt: Prompt, results: ToolResults
        ) -> List[Prompt]:
            # Given the results of a tool call, transform them into a prompt
            # friendly format.
            ...
            return []

        def prepare_prompt(self, context: Context, **kwargs) -> Prompt:
            # Given the arguments for the agent, create a prompt that tells
            # our BackendAgent what to do.
            ...
            return []

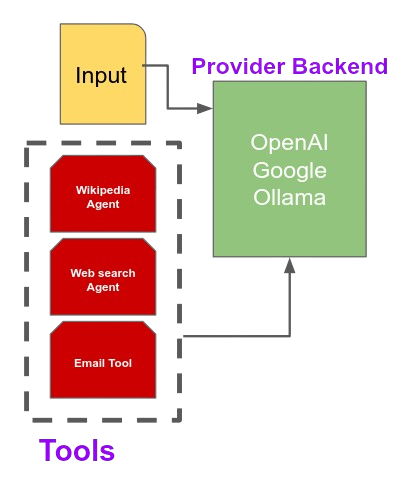

Choosing a Backend

When in doubt, trial and error works. You have the following backends available:

- OpenAI - utilizes OpenAI's built-in tool calling API

- Google - utilizes Google Gemini's built-in tool calling API

- Ollama - utilizes Ollama's built-in tool calling API for models that support it; be sure to check the Ollama docs for more information.

- ReAct - a backend that utilizes the Thought/Action/Answer paradigm to call tools and think through tasks.

- PythonEnv - utilizes Python coding within a Docker environment to safely execute LLM code with access to your tools to try and solve problems.

Provider Backends

Some model providers have built-in support for tool calling. As long as the API request is formatted correctly, the model will know how to call tools, as it has already been trained to do so.

In this instance, arkaine is just handling the formatting of the API request, but not much else in terms of selecting tools. arkaine still handles routing to tools correctly though.

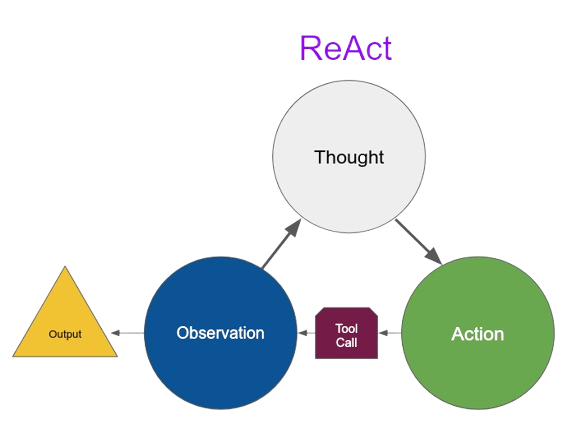

ReAct

ReAct is based on the research paradigm of looping through Thought/Action/Answer. The model is instructed to complete a task, first outputting its thought, then either providing Action and Action Input tags, or replying with Answer. When the model replies with Answer, the backend's cycle is complete.

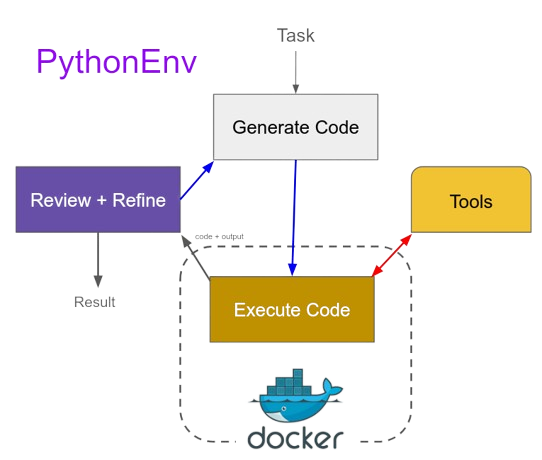
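
As a rough illustration of the Thought/Action/Answer cycle, the sketch below parses one model response into either a tool call or a final answer. The exact tag layout (`Action:`, `Action Input:`, `Answer:` on their own lines) is an assumption for this example, and `parse_react` is a hypothetical helper rather than arkaine's parser.

```python
import re
from typing import Optional, Tuple

# Assumed tag layout: "Action: <tool>" followed by "Action Input: <input>".
ACTION_RE = re.compile(
    r"Action:\s*(?P<tool>.+?)\s*\nAction Input:\s*(?P<input>.+)", re.DOTALL
)
ANSWER_RE = re.compile(r"Answer:\s*(?P<answer>.+)", re.DOTALL)


def parse_react(text: str) -> Tuple[str, Optional[str], str]:
    """Return ("answer", None, answer) or ("action", tool_name, tool_input)."""
    answer = ANSWER_RE.search(text)
    if answer:
        # An Answer tag ends the cycle.
        return ("answer", None, answer.group("answer").strip())
    action = ACTION_RE.search(text)
    if action:
        return ("action", action.group("tool").strip(), action.group("input").strip())
    raise ValueError("response contains neither an Action nor an Answer")


step = parse_react("Thought: I need the weather.\nAction: weather\nAction Input: Paris")
final = parse_react("Thought: I have what I need.\nAnswer: It is sunny.")
print(step, final)
```

A backend built on this would call the named tool, append its output to the prompt, and query the model again until an answer is returned.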

PythonEnv

Inspired by CodeActions, PythonEnv pairs the LLM with a dockerized Python environment, where it creates executable Python code. Each tool is injected into the environment as an individual Python function that reaches out of the Docker container to call the associated tool, and the model is informed of this. Files can be transferred into or out of the container as needed to control the level of isolation for the agent.

LLMs

Arkaine supports multiple integrations with different LLM interfaces:

- OpenAI

- Anthropic Claude

- Groq - cheap hosted offering of multiple open sourced models

- Ollama - local offline models supported!

- Google - utilizes Google's Gemini API

Expanding to other LLMs

Adding support for other LLMs is easy: you merely need to implement the LLM interface. Here's an example:

    from arkaine.llms.llm import LLM, Prompt  # Prompt's import path is an assumption


    class MyLLM(LLM):
        def __init__(self, api_key: str):
            self.api_key = api_key

        def context_length(self) -> int:
            # Return the maximum number of tokens the model can handle.
            return 8192

        def completion(self, prompt: Prompt) -> str:
            # Implement the LLM's functionality here
            return self.call_llm(prompt)

Often it is necessary to include the context limits of models so the context_length can be properly set.

Contexts, State, and You

All tools and agents are passed a Context object at execution time (when they are called). The goal of the context object is to track tool state, be it the tool's own state or that of its children. It also provides a number of helper functions to make it easier to work with tooling. All of a context's functionality is thread safe.

Contexts are acyclic graphs with a single root node. Children can branch out, but ultimately return to the root node as execution completes.

Contexts track the progress, input, output, and possible exceptions of the tool and all sub tools. They can be saved (.save(filepath)) and loaded (.load(filepath)) for future reference.

Contexts are automatically created when you call your tool, but a blank one can be passed in as the first argument to all tools as well.

    context = Context()
    my_tool(context, {"input": "some input"})

State Tracking

Contexts can track state for their own tool, hold temporary debug information, or provide overall tool state.

To track information within the execution of a tool (and only in that tool), you can access the context's thread safe state by using it like a dict.

    context["your_variable"] = "some information"
    print(context["your_variable"])

To make working with this data in a threadsafe manner easier, arkaine provides additional functionality not found in a normal dict:

- append - append a value to a list value contained within the context

- concat - concatenate a value to a string value contained within the context

- increment - increment a numeric value contained within the context

- decrement - decrement a numeric value contained within the context

- update - update a value contained within the context using a function, allowing more complex operations to be performed atomically

This information is stored on the context it is accessed from.
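
To show why atomic helpers matter, here is a minimal sketch of a lock-protected state object. It is not arkaine's Context; `SafeState` is a hypothetical class that mirrors only the update, increment, and append operations described above.

```python
import threading
from typing import Any, Callable, Dict


class SafeState:
    """A dict-like store whose read-modify-write operations are atomic."""

    def __init__(self) -> None:
        self._data: Dict[str, Any] = {}
        self._lock = threading.Lock()

    def __getitem__(self, key: str) -> Any:
        with self._lock:
            return self._data[key]

    def __setitem__(self, key: str, value: Any) -> None:
        with self._lock:
            self._data[key] = value

    def update(self, key: str, fn: Callable[[Any], Any]) -> Any:
        # Read, transform, and write back while holding the lock, so no
        # other thread can interleave between the read and the write.
        with self._lock:
            self._data[key] = fn(self._data[key])
            return self._data[key]

    def increment(self, key: str, amount: int = 1) -> Any:
        return self.update(key, lambda value: value + amount)

    def append(self, key: str, item: Any) -> Any:
        return self.update(key, lambda values: values + [item])


state = SafeState()
state["count"] = 0


def worker() -> None:
    for _ in range(1000):
        state.increment("count")


threads = [threading.Thread(target=worker) for _ in range(8)]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()

print(state["count"])
```

Writing `state["count"] = state["count"] + 1` instead would take the lock twice, once for the read and once for the write, leaving a window in which another thread's update could be lost.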

Again, a context contains information for its own state, but child contexts cannot access this information (or vice versa).

    context["your_variable"] = "it'd be neat if we just were nice to each other"
    print(context["your_variable"])
    # it'd be neat if we just were nice to each other

    child_context = context.child_context()
    print(child_context["your_variable"])
    # KeyError: 'your_variable'

Execution Level State

It may be possible that you want state to persist across the entire chain of contexts. arkaine considers this as "execution" state, which is not a part of any individual context, but the entire entity of all contexts for the given execution. This is useful for tracking state across multiple tools and being able to access it across children.

To utilize this, you can use .x on any Context object. Just as with the normal state, it is thread safe and provides the same helper operations.

    context.x["your_variable"] = "robots are pretty cool"
    print(context.x["your_variable"])
    # robots are pretty cool

    child_context = context.child_context()
    print(child_context.x["your_variable"])
    # robots are pretty cool
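
The difference between per-context state and execution-level state can be pictured with a very small model. This sketch is illustrative only: `MiniContext` is hypothetical, omits locking entirely, and simply shares one dictionary by reference among all contexts in the same tree.

```python
from typing import Any, Dict, Optional


class MiniContext:
    def __init__(self, parent: Optional["MiniContext"] = None) -> None:
        # Local state belongs to this context alone.
        self.state: Dict[str, Any] = {}
        # Execution state is one dict shared by every context in the tree.
        self.x: Dict[str, Any] = parent.x if parent is not None else {}

    def child_context(self) -> "MiniContext":
        return MiniContext(parent=self)


root = MiniContext()
root.state["local"] = "only the root sees this"
root.x["shared"] = "robots are pretty cool"

child = root.child_context()
print("local" in child.state)
print(child.x["shared"])
```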

Debug State

It may be necessary to report key information if you wish to debug the performance of a tool. To help this along, arkaine provides a debug state. Values are only written to it if the global context option of debug is set to true.

    context.debug["your_variable"] = "robots are pretty cool"
    print(context.debug["your_variable"])
    # KeyError: 'your_variable'

    from arkaine.options.context import ContextOptions

    ContextOptions.debug(True)

    context.debug["your_variable"] = "robots are pretty cool"
    print(context.debug["your_variable"])
    # robots are pretty cool

Debug state is entirely contained within the context it is set on, like the base state.

Retrying Failed Contexts

Let's say you're developing a chain of tools and agents to create a complex behavior. Since this likely involves multiple tools making web calls and multiple LLM calls, it may take a significant amount of time and compute to re-run everything from scratch. To help with this, you can save the context and call retry(ctx) on its tool. It will reuse the same arguments and call down through its children until it finds an incomplete or errored context, then resume the run from there. Set up correctly, this lets you skip re-running the entire chain.

Assuming that we have a tool my_tool, and we have a context for an incomplete execution, we can then:

    ctx = my_tool.async_call({"input": "some input"})

    # do other things

    ctx.wait()
    if ctx.exception:
        print("Retrying...")
        my_tool.retry(ctx)
    else:
        print(ctx.result)

By default, a tool's retry function simply calls the tool again with the original arguments. Each flow tool has a custom retry method that considers its own execution progress. For instance, a linear flow tool will retry from the specific step that failed, without re-running prior ones. A branch flow tool will retry the failed or incomplete branches, but not affect successfully completed branches.
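
The "resume from the failed step" behavior of a linear flow can be sketched as follows. This is a simplified model rather than arkaine's retry logic: `LinearRun` is hypothetical and records step outputs in a plain list instead of a context tree.

```python
from typing import Any, Callable, List


class LinearRun:
    """Remembers the output of each completed step so a retry can resume."""

    def __init__(self, steps: List[Callable[[Any], Any]], initial_input: Any) -> None:
        self.steps = steps
        self.initial_input = initial_input
        self.outputs: List[Any] = []  # outputs of completed steps, in order

    def run(self) -> Any:
        # Start from the last recorded output, skipping completed steps.
        value = self.outputs[-1] if self.outputs else self.initial_input
        for step in self.steps[len(self.outputs):]:
            value = step(value)  # may raise, leaving earlier outputs intact
            self.outputs.append(value)
        return value


calls = {"double": 0, "flaky": 0}


def double(x: int) -> int:
    calls["double"] += 1
    return x * 2


def flaky(x: int) -> int:
    calls["flaky"] += 1
    if calls["flaky"] == 1:
        raise RuntimeError("temporary failure")
    return x + 1


run = LinearRun([double, flaky], 5)
try:
    result = run.run()
except RuntimeError:
    result = run.run()  # retry: only the failed step is executed again

print(result, calls)
```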

Asynchronous Execution

You may want to trigger your tooling in a non-blocking manner. arkaine has you covered.

    ctx = my_tool.async_call({"input": "some input"})

    # do other things

    ctx.wait()
    print(ctx.result)

If you prefer futures, you can request a future from any context.

    ctx = my_tool.async_call({"input": "some input"})

    # do other things

    ctx.future().result()

Flow

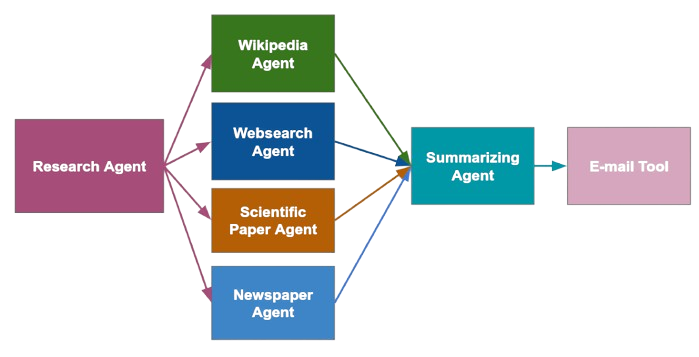

To build more complex behaviors, arkaine provides a way to compose agents out of several other agents.

Agents can feed into other agents, but the flow of information between these agents can be complex! To make this easier, arkaine provides several flow tools that maintain observability and handle a lot of the complexity for you. When you use these flow tools, the result is a new tool/agent that you can call like any other agent. Thus very complex arkaine agents are actually several tools and agents connected via various flow tools.

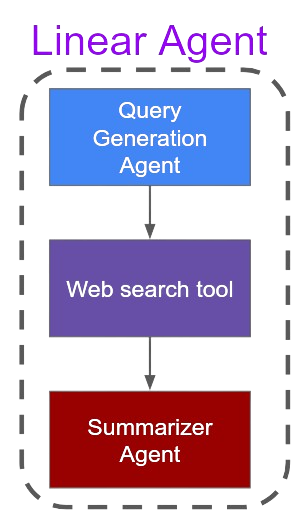

-Linear - A flow tool that will execute a set of agents in a linear fashion, feeding into one another.

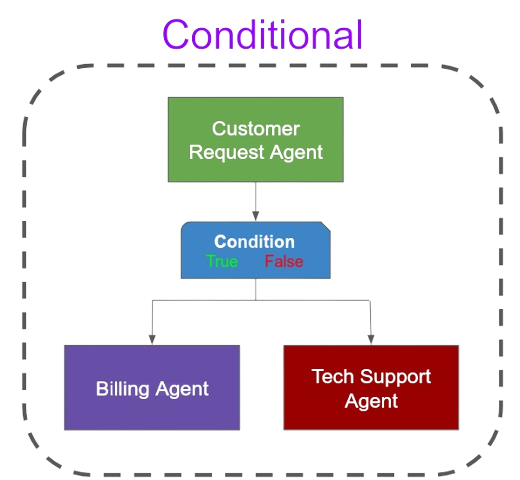

-Conditional - A flow tool that will execute a set of agents in a conditional fashion, allowing a branching of if/then/else logic.

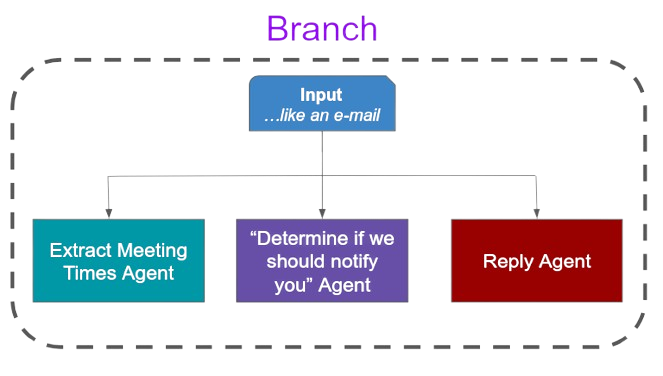

-Branch - A branch tool allows a singular input to be worked on by multiple agents at once. Given a singular input, execute in parallel multiple tools/agents and aggregate their results at the end.

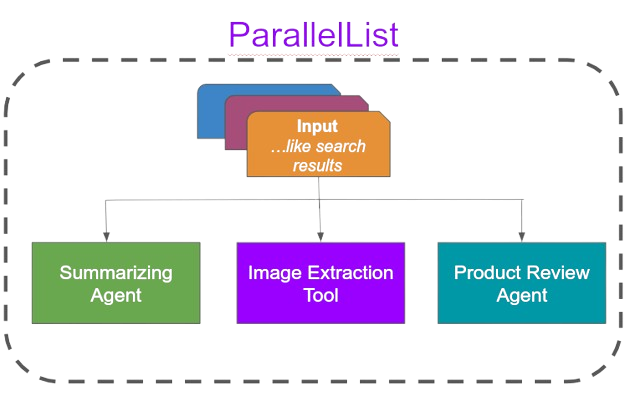

-ParallelList - Given a list of inputs, execute in parallel the same tool/agent and aggregate their results at the end.

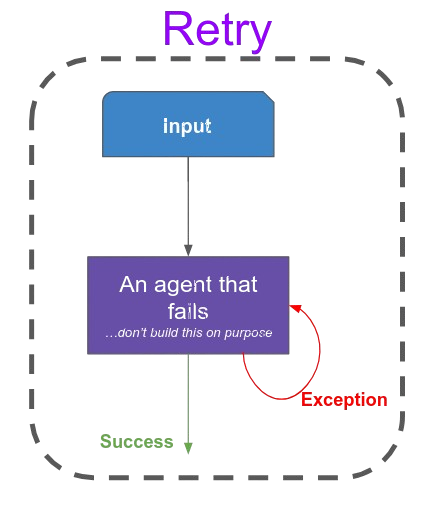

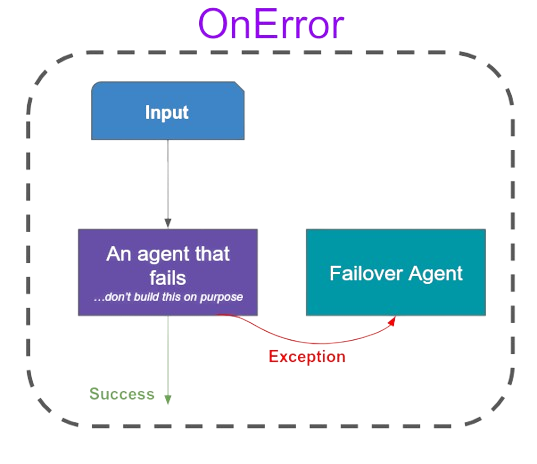

-Retry - Given a tool/agent, retry it until it succeeds or up to a set amount of attempts. Also provides a way to specify which exceptions to retry on.

-OnError - Given a tool agent, if an exception is thrown then execute a different tool/agent.

-FireAndForget - Given a tool/agent, execute it, but do not wait for its completion; immediately return. Useful on branching and conditional flows.

Linear

You can make tools out of the Linear tool, where you pass it a name, description, and a list of steps. Each step can be a tool, a function, or a lambda. Lambdas and functions are converted into tools with toolify when the flow is created.

    from arkaine.flow.linear import Linear


    def some_function(x: int) -> str:
        return str(x) + " is a number"


    my_linear_tool = Linear(
        name="my_linear_flow",
        description="A linear flow",
        steps=[
            tool_1,
            lambda x: x**2,
            some_function,
            ...
        ],
    )

    my_linear_tool({"x": 1})

Conditional

A Conditional tool is a tool that will execute a set of agents in a conditional fashion, allowing a branching of if->then/else logic. The then/otherwise attributes are the true/false branches respectively, and can be other tools or functions.

    from arkaine.flow.conditional import Conditional
    from arkaine.tools.tool import Argument

    my_tool = Conditional(
        name="my_conditional_flow",
        description="A conditional flow",
        args=[Argument("x", "An input value", "int", required=True)],
        condition=lambda x: x > 10,
        then=tool_1,
        otherwise=lambda x: x**2,
    )

    my_tool(x=11)

Branch

A Branch tool is a tool that will execute a set of agents in a parallel fashion, allowing a branching from an input to multiple tools/agents.

    from arkaine.flow.branch import Branch
    from arkaine.tools.tool import Argument

    my_tool = Branch(
        name="my_branch_flow",
        description="A branch flow",
        args=[Argument("x", "An input value", "int", required=True)],
        tools=[tool_1, tool_2, ...],
    )

    my_tool(11)

The output of each tool can be formatted using the formatters attribute; it accepts a list of functions wherein the index of the function corresponds to the index of the associated tool.

By default, the branch uses the all completion strategy (set using the completion_strategy attribute), which waits for all branches to complete. You can also use any to wait for the first branch, n for the first n branches, or majority for a majority of the branches to complete.

Similarly, you can set an error_strategy to control whether or not to fail on exceptions among the child tools.
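
One way to picture the completion strategies is as a count of how many branches must finish before results are returned. The sketch below uses only `concurrent.futures`; `required_count` and `run_branches` are hypothetical helpers, and reading "majority" as "more than half" is an assumption.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Any, Callable, List, Optional


def required_count(strategy: str, total: int, n: Optional[int] = None) -> int:
    """Translate a completion strategy into a number of finished branches."""
    if strategy == "all":
        return total
    if strategy == "any":
        return 1
    if strategy == "n":
        if n is None or not 1 <= n <= total:
            raise ValueError("n must be between 1 and the number of branches")
        return n
    if strategy == "majority":
        return total // 2 + 1  # assumption: strictly more than half
    raise ValueError(f"unknown strategy: {strategy}")


def run_branches(
    tools: List[Callable[[Any], Any]],
    value: Any,
    strategy: str = "all",
    n: Optional[int] = None,
) -> List[Any]:
    needed = required_count(strategy, len(tools), n)
    results: List[Any] = []
    pool = ThreadPoolExecutor(max_workers=max(1, len(tools)))
    futures = [pool.submit(tool, value) for tool in tools]
    for future in as_completed(futures):
        results.append(future.result())
        if len(results) >= needed:
            break  # enough branches have finished
    pool.shutdown(wait=False)  # do not block on the remaining branches
    return results


branches = [lambda x: x + 1, lambda x: x * 2, lambda x: x**2]
print(sorted(run_branches(branches, 3)))
print(len(run_branches(branches, 3, strategy="majority")))
```

Results arrive in completion order, which is why the example sorts them before printing.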

ParallelList

A ParallelList tool is a tool that will execute a singular tool across a list of inputs. These are fired off in parallel (with an optional max_workers setting).

    from arkaine.flow.parallel_list import ParallelList
    from arkaine.tools import toolify


    @toolify
    def my_tool(x: int) -> int:
        return x**2


    my_parallel_tool = ParallelList(
        tool=my_tool,
    )

    my_parallel_tool([1, 2, 3])

If you have a need to format the items prior to being fed into the tool, you can use the item_formatter attribute, which runs against each input individually.

    my_parallel_tool = ParallelList(
        tool=my_tool,
        item_formatter=lambda x: int(x),
    )

    my_parallel_tool(["1", "2", "3"])

...and as before with Branch, you can set attributes for completion_strategy, completion_count, and error_strategy.
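
Conceptually, this is a parallel map with an optional per-item formatting step. A minimal standard-library sketch, assuming a hypothetical `parallel_list` helper rather than arkaine's class:

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Iterable, List, Optional


def parallel_list(
    tool: Callable[[Any], Any],
    items: Iterable[Any],
    item_formatter: Optional[Callable[[Any], Any]] = None,
    max_workers: Optional[int] = None,
) -> List[Any]:
    # Format each item individually before it reaches the tool.
    prepared = [item_formatter(item) if item_formatter else item for item in items]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map preserves input order even though calls run concurrently.
        return list(pool.map(tool, prepared))


squares = parallel_list(lambda x: x**2, ["1", "2", "3"], item_formatter=int, max_workers=2)
print(squares)
```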

Retry

The Retry flow tool is a tool that will retry a tool/agent until it succeeds or up to a set amount of attempts, with an option to specify which exceptions to retry on.

    from arkaine.flow.retry import Retry

    my_tool = ...

    my_resilient_tool = Retry(
        tool=my_tool,
        max_retries=3,
        delay=0.5,
        exceptions=[ValueError, TypeError],
    )

    my_resilient_tool("hello world")
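
The retry behavior can be sketched as a plain wrapper function. This is not arkaine's Retry class: `with_retry` is hypothetical, and it assumes max_retries counts the attempts made after the first call fails.

```python
import time
from typing import Any, Callable, Sequence, Type


def with_retry(
    func: Callable[..., Any],
    max_retries: int = 3,
    delay: float = 0.5,
    exceptions: Sequence[Type[BaseException]] = (Exception,),
) -> Callable[..., Any]:
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        # One initial attempt plus up to max_retries further attempts.
        for attempt in range(max_retries + 1):
            try:
                return func(*args, **kwargs)
            except tuple(exceptions):
                if attempt == max_retries:
                    raise  # out of retries: surface the last error
                time.sleep(delay)

    return wrapper


attempts = {"n": 0}


def flaky() -> str:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise ValueError("not yet")
    return "ok"


resilient = with_retry(flaky, max_retries=3, delay=0.0, exceptions=[ValueError, TypeError])
outcome = resilient()
print(outcome, attempts["n"])
```

Exceptions that are not listed propagate immediately without any retry.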

Toolbox

Since arkaine is trying to be a batteries-included framework, it comes with a set of tools that are ready to use that will hopefully expand soon. To that end we have the toolbox - a package of tools constantly growing to support more use cases and projects so that you can focus on what's important for your project.

For more information on the toolbox, see the toolbox page.

Connectors

It's one thing to get an agent to work; it's another to get it to work when you specifically want it to, or in reaction to something else. For this, arkaine provides connectors: components that stand alone and accept your agents as tools, triggering them in a configurable manner.

View the connectors page for more information on current connectors.

quickstart function

There are plenty of cool features that you'll commonly want to use when building arkaine AI agents. To make it easy to get set up with most of them, the quickstart function is provided. It handles:

- Context storage configuration

- Logging setup

- Spellbook socket/server initialization

- Proper cleanup on program exit

Basic Usage

    from arkaine.quickstart import quickstart

    # Basic setup with in-memory context storage
    done = quickstart()

    # When finished
    done()

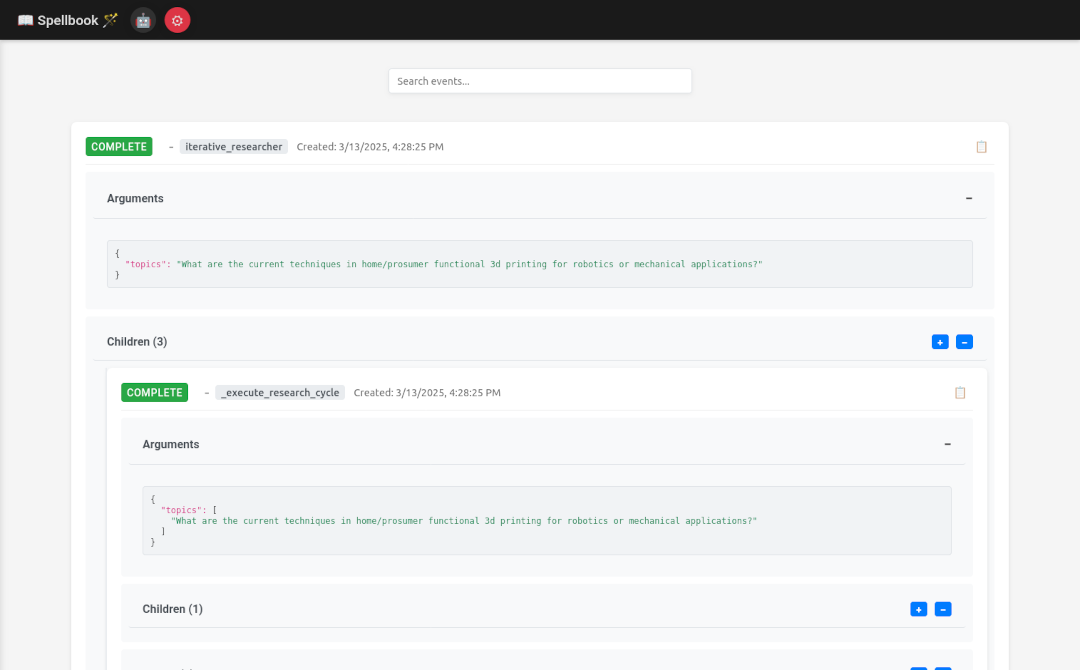

Spellbook

Spellbook is an in-browser tool for monitoring and debugging your agents as they act in real time.

To run it, you can use the following command:

spellbook

or

python -m arkaine.spellbook.server

Spellbook support can be added to your project by setting spellbook_socket=True in the quickstart function.

Configuration Options

The function accepts several optional parameters:

    quickstart(
        context_store=None,      # Context storage configuration
        logger=False,            # Enable global logging
        spellbook_socket=False,  # Spellbook socket configuration
        spellbook_server=False,  # Spellbook server configuration
    ) -> Callable[[], None]  # Returns cleanup function

Context Storage

You can configure context storage in several ways:

    # Use in-memory storage (default)
    quickstart()

    # Use file-based storage with path
    quickstart(context_store="path/to/store")

    # Use custom context store
    from arkaine.utils.store.context import CustomContextStore
    quickstart(context_store=CustomContextStore())

Logging

Enable global logging for better debugging:

quickstart(logger=True)

Spellbook Integration

Spellbook provides a real-time web interface for monitoring and interacting with your arkaine tools and agents. It consists of two main components:

- spellbook_socket - a socket server that broadcasts tool/agent events and accepts commands. This is hosted by your agent program.

- spellbook_server - a web interface for visualizing execution, debugging, and triggering tools. This can be run separately via spellbook or from within your agent program.

Configure Spellbook socket and server:

    from arkaine.quickstart import quickstart

    # Enable both with default ports
    quickstart(spellbook_socket=True, spellbook_server=True)

    # Specify custom ports
    quickstart(spellbook_socket=8001, spellbook_server=8002)

    # Use custom instances
    from arkaine.spellbook.socket import SpellbookSocket
    from arkaine.spellbook.server import SpellbookServer

    quickstart(
        spellbook_socket=SpellbookSocket(port=8001),
        spellbook_server=SpellbookServer(port=8002)
    )

Cleanup

The function returns a cleanup callable that should be called when you're done:

    done = quickstart(
        context_store="path/to/store",
        logger=True,
        spellbook_socket=True,
        spellbook_server=True
    )

    # Your code here...

    # Clean up when finished
    done()

Note that cleanup is also automatically registered for program exit and signal handlers (SIGTERM/SIGINT).

keep_alive

Perhaps you need your application to run forever until it's told to exit. To make life easier for you, we also provide keep_alive, a helper function.

    from arkaine.quickstart import quickstart, keep_alive

    done = quickstart()

    # Your code here...

    keep_alive(done)

keep_alive will call done when the program is terminated via a SIGTERM or SIGINT. It also accepts other functions, so if you have your own cleanup logic you want to trigger for a proper shutdown:

    from arkaine.quickstart import quickstart, keep_alive

    done = quickstart()

    # Your code here...

    keep_alive([done, my_cleanup_func], force_timeout=10.0)

All cleanup functions are called in parallel to prevent blocking. The force_timeout parameter is optional and defaults to 3 seconds; it tells the shutdown procedure to forcibly exit the program after that amount of time, regardless of whether the cleanup functions have completed.
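
The shutdown behavior described above, parallel cleanup with a hard deadline, can be sketched with plain threads. This is an illustration and not arkaine's keep_alive: `run_cleanups` is a hypothetical helper that only reports whether every cleanup finished in time, leaving the actual signal handling and process exit to the caller.

```python
import threading
import time
from typing import Callable, Iterable


def run_cleanups(funcs: Iterable[Callable[[], None]], force_timeout: float = 3.0) -> bool:
    """Run all cleanup functions in parallel; return True if all finished in time."""
    # Daemon threads will not keep the process alive past the deadline.
    threads = [threading.Thread(target=func, daemon=True) for func in funcs]
    for thread in threads:
        thread.start()

    deadline = time.monotonic() + force_timeout
    for thread in threads:
        # Wait only for whatever time remains before the shared deadline.
        thread.join(max(0.0, deadline - time.monotonic()))

    return not any(thread.is_alive() for thread in threads)


finished = run_cleanups([lambda: None, lambda: time.sleep(0.01)], force_timeout=1.0)
timed_out = run_cleanups([lambda: time.sleep(5)], force_timeout=0.05)
print(finished, timed_out)
```

A caller could register such a helper with `signal.signal` for SIGTERM and SIGINT and exit the process once it returns, whether or not every cleanup completed.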